Digital marketing done right means setting up the proper environment, with tools that give you a constant flow of measurable data, with the main objective of improving final conversion rates. When it comes to CRO (Conversion Rate Optimization), whether the metric is CTR (click-through rate) or CVR (conversion rate), A/B testing is a vital part of the optimization process.

What is A/B Testing?

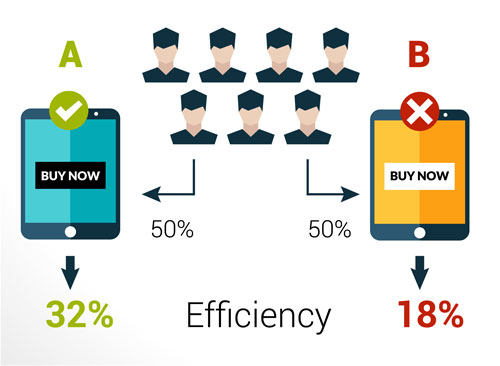

A/B testing is an experiment run in a controlled environment in which two similar variations (of an ad, a landing page, and so on) are tested against real user interaction (visitors). The goal is to see which variation yields the better outcome in terms of conversion percentage.
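To make "conversion percentage" concrete, here is a minimal Python sketch that computes CTR and CVR for two variations and picks the one with the higher conversion rate. The `VariationStats` class, its field names and all counts are hypothetical; CVR is taken here as conversions per click, although landing page tests often measure it per visitor instead.

```python
from dataclasses import dataclass


@dataclass
class VariationStats:
    """Aggregate counts for one variation (hypothetical structure)."""
    name: str
    impressions: int  # times the ad or page was shown
    clicks: int       # clicks on the ad or call to action
    conversions: int  # completed goals, e.g. sign-ups or purchases

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks per impression."""
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cvr(self) -> float:
        """Conversion rate: conversions per click (assumption; could be per visitor)."""
        return self.conversions / self.clicks if self.clicks else 0.0


def higher_cvr(a: VariationStats, b: VariationStats) -> VariationStats:
    """Return the variation with the higher conversion rate (ties go to A)."""
    return b if b.cvr > a.cvr else a


# Hypothetical numbers for illustration only.
a = VariationStats("A", impressions=10_000, clicks=400, conversions=20)
b = VariationStats("B", impressions=10_000, clicks=450, conversions=27)
for v in (a, b):
    print(f"{v.name}: CTR={v.ctr:.2%}, CVR={v.cvr:.2%}")
print("Higher CVR:", higher_cvr(a, b).name)
```

Comparing raw rates like this only tells you which variation did better in this sample, not whether the difference is reliable.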

Examples of A/B test types:

Testing Paid Ads:

1. The audience: deliver the same ad creative in parallel to two different audiences (Audience “A” vs Audience “B”)

2. The creative: test two ad creatives (Ad Creative “A” vs Ad Creative “B”)

3. The ad copy: test two versions of the copy (Ad Copy “A” vs Ad Copy “B”)

Testing Landing Pages:

Landing Page A vs Landing Page B

Why A/B test?

Because it replaces guessing with a scientific approach to conversion optimization. A/B testing uses automated tools that split users into two groups and make sure each user sees the same version, variation A or variation B, every time they visit the landing page or ad environment.
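One common way such tools keep each visitor in the same variation is deterministic bucketing: hash a stable user identifier and map the hash to A or B. The Python sketch below assumes a stable `user_id` (for example from a first-party cookie) and a hypothetical experiment name; real testing tools may use a different mechanism.

```python
import hashlib


def assign_variation(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to variation "A" or "B".

    Hashing the experiment name together with the user ID means the same
    user always gets the same variation within one experiment, while
    different experiments get independent splits.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "A" if bucket < split else "B"


# The same (hypothetical) visitor sees the same variation on every visit.
first_visit = assign_variation("visitor-123", "landing-page-test")
second_visit = assign_variation("visitor-123", "landing-page-test")
print(first_visit == second_visit)  # True

# Across many visitors the split comes out close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}", "landing-page-test")] += 1
print(counts)
```

Hashing instead of storing a random assignment means no lookup table is needed: any server that sees the same user ID reaches the same answer.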

How to A/B test?

The most important aspect is striking the right balance between two principles: “keep it simple” and “keep it relevant”.

“Keep it simple” means knowing what you’re testing, and why you’re testing it, before you start the test.

Let’s go back to the paid ad example and imagine you’ve set up your first ad.

Variation A: you have your audience, your creative and your copy.

Variation B: you change the audience, change the copy and tweak the creative as well.

You start the test and see that variation B wins by a margin of 10-15%. Great!
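Before celebrating, it is worth checking whether a 10-15% margin is more than noise. A common check, not part of the original example, is a two-proportion z-test on the conversion counts. The minimal Python sketch below uses made-up counts and the standard pooled normal approximation.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float, float]:
    """Compare the conversion rates of variations A and B.

    Returns (relative lift of B over A, z-score, two-sided p-value) using the
    pooled normal approximation, which assumes reasonably large samples.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    lift = (rate_b - rate_a) / rate_a
    return lift, z, p_value


# Same 15% lift (4.0% vs 4.6%) with two hypothetical sample sizes.
small = two_proportion_z_test(40, 1_000, 46, 1_000)
large = two_proportion_z_test(4_000, 100_000, 4_600, 100_000)
for label, (lift, z, p) in (("1,000 visitors each", small), ("100,000 visitors each", large)):
    print(f"{label}: lift={lift:.0%}, z={z:.2f}, p={p:.3g}")
```

With 1,000 visitors per variation the 15% lift is well within the range of chance (p ≈ 0.5), while the same lift on 100,000 visitors per variation is very unlikely to be noise. Even a significant result only says that B beat A, not why.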

You scrap A and keep running variation B.

Can you say why B did better than A?

Was it the audience? Was it the creative change? Was it the copy? Maybe the combination? Can you draw a proper conclusion from this test and apply it to your next ads? No, you can’t, and you can easily draw the wrong one.

Could the creative from variation A combined with the audience from variation B produce an even bigger increase in conversions? Maybe, maybe not.

This is why it is important to follow A/B testing best practices and take a granular approach: test the audience in one test, the creative in a separate test, and landing page modifications in yet another.
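The “one change per test” rule can be made explicit by describing each variation as a set of attributes and checking that A and B differ in exactly one of them. A minimal Python sketch; the `AdVariation` fields and values are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AdVariation:
    """One paid-ad variation described by the elements you might test (hypothetical)."""
    audience: str
    creative: str
    copy: str


def changed_attributes(a: AdVariation, b: AdVariation) -> list[str]:
    """Names of the attributes that differ between two variations."""
    return [f.name for f in fields(AdVariation) if getattr(a, f.name) != getattr(b, f.name)]


def is_granular(a: AdVariation, b: AdVariation) -> bool:
    """True if the test isolates a single change, so the result can be attributed to it."""
    return len(changed_attributes(a, b)) == 1


baseline = AdVariation(audience="audience-1", creative="creative-1", copy="copy-1")
# The paid ad example: everything changes at once.
mixed = AdVariation(audience="audience-2", creative="creative-2", copy="copy-2")
# A granular test: only the audience changes.
audience_only = AdVariation(audience="audience-2", creative="creative-1", copy="copy-1")

print(changed_attributes(baseline, mixed), is_granular(baseline, mixed))
print(changed_attributes(baseline, audience_only), is_granular(baseline, audience_only))
```

A check like this can run before a test is launched, flagging setups whose outcome could not be attributed to a single change.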

Then what does “keep it relevant” mean?

This applies especially to landing page A/B testing. Plenty of webinars and so-called prophets claim that really small changes, such as a different button color or a slightly smaller font size, have produced great conversion increases.

[Variation A = grey / Variation B = blue, source: Wordstream]

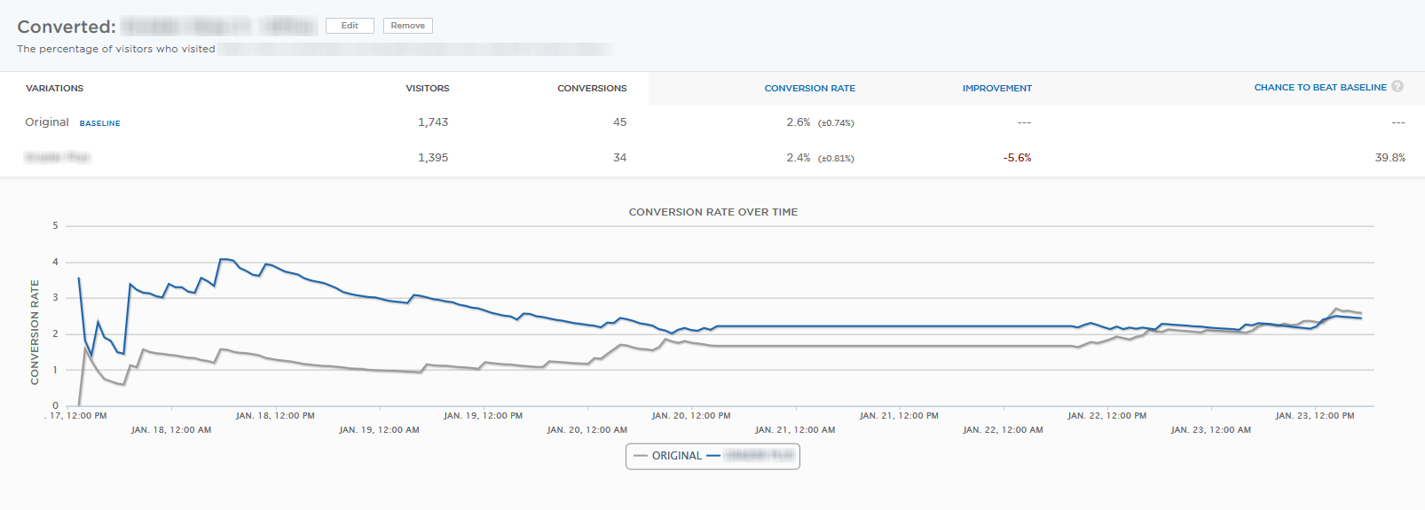

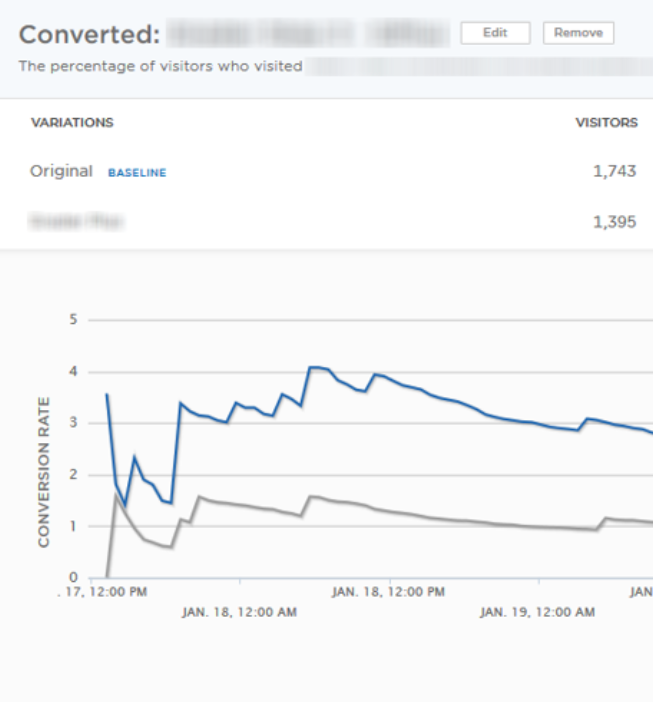

The spike in conversions is noticeable.

In reality, in 90% of cases these are just short-term spikes. By comparing hundreds of landing pages and the research data provided by agency automation tools, we found that a consistent change in how the USP (Unique Selling Proposition) is packaged is needed to achieve a real, lasting conversion increase.
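One way to tell a short-term spike from a lasting improvement is to keep tracking the lift after launch and compare it period by period. A minimal Python sketch, assuming you can export daily (visitors, conversions) counts per variation; the data below is invented to show a spike that fades.

```python
def lift_per_period(
    daily_a: list[tuple[int, int]],
    daily_b: list[tuple[int, int]],
    days_per_period: int = 7,
) -> list[float]:
    """Relative lift of B over A for each consecutive period.

    daily_a, daily_b: (visitors, conversions) tuples, one per day,
    covering the same days for both variations.
    """
    if len(daily_a) != len(daily_b):
        raise ValueError("both variations must cover the same days")
    lifts = []
    for start in range(0, len(daily_a), days_per_period):
        chunk_a = daily_a[start:start + days_per_period]
        chunk_b = daily_b[start:start + days_per_period]
        rate_a = sum(c for _, c in chunk_a) / sum(v for v, _ in chunk_a)
        rate_b = sum(c for _, c in chunk_b) / sum(v for v, _ in chunk_b)
        lifts.append((rate_b - rate_a) / rate_a)
    return lifts


# Invented data: A converts steadily at 3%; B starts at 4.5% and then fades.
daily_a = [(1_000, 30)] * 21
daily_b = [(1_000, 45)] * 7 + [(1_000, 33)] * 7 + [(1_000, 31)] * 7
for week, lift in enumerate(lift_per_period(daily_a, daily_b), start=1):
    print(f"week {week}: lift {lift:+.0%}")
```

A lift that shrinks toward zero week after week is the short-term spike pattern described above; a lasting improvement should hold roughly steady across periods.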